Tackling a complex CRM data migration for Gresham House

Gresham House, a specialist alternative asset management company, first met Redox when it acquired FIM in 2018. Redox had been supporting the forestry investment business since 2006, having developed its bespoke Line of Business (LoB) system called Forest.

The team at Gresham House liked Forest. They wanted to develop it throughout the organisation, replacing their existing CRM system.

Looking to acquire several businesses at the time, Gresham House saw that a new “Forest” system could replace CRMs in other purchases too.

We first built a new CRM system called SAM for Gresham House. That was one thing, but successfully migrating all the data into it was another. Not a software challenge for the faint-hearted.

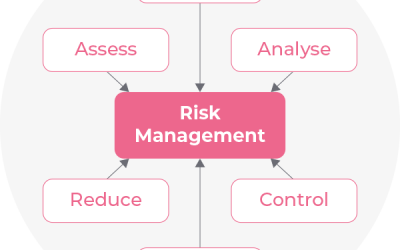

Identifying the migration challenges.

With data coming from several sources, it soon became clear there were many complications to overcome if we were to deliver a seamless migration.

Stored on various platforms (including Salesforce and HubSpot) the data included company information, contact details, communication logs, and deal information. We could access it as comma-separated value (CSV) files.

So far, so good. However, several migration issues soon arose.

To maintain data integrity, links from the importing records to the correct records in the new CRM system must exist. Each had a unique identifier provided by the source CRM. Following import, the record would gain a new identifier. Yet, historical information, such as deal information and communication logs, had to remain traceable.

With so many data sources, the risk of duplication was high. We had to decide how to deal with this issue. Plus, some of the importing records already existed in SAM. Which record should we prioritise?

A few of the source CRM systems held notes in text files. This posed an added complication. We’d need to extract the text files and maintain a link to the correct records.

The different platforms had incompatible field names. And custom fields (not relevant to the entire organisation) held specific data for the acquired companies.

Encryption added another layer of difficulty. The database behind SAM is Microsoft SQL Server. In line with GDPR data protection advice from the Information Commissioners Office (ICO), we encrypt the fields using the databases native encryption method, Always Encrypted.

Finally, migration speed was crucial. Platform licences were soon terminating, so we had a short window to avoid Gresham House enduring the cost of extending them.

Data at a glance

Already in SAM CRM:

- 5,399 companies

- 18,807 contacts

Data to import:

- 12,108 companies (59% new companies)

- 23,436 contacts (58% new contacts)

Creating custom code.

While not simple, the answer was obvious: Gresham House needed custom code. This would ensure the data imported successfully, overcoming all the challenges we’d identified.

The first stage of the process was to perform an initial validation check on the data. We needed to make sure it matched the data formats expected. Also, our validation check assessed any look-up values in use to check if they matched existing entries. It also mapped them if they were close to existing entries, and created new ones if they didn’t exist.

Having passed the validation phase, we moved onto testing our custom code. Initially, we used a single developer machine to complete some test imports, disposing of the data every time.

Once we were confident, we deployed the code on a single test machine at Gresham House, again disposing of all test datasets.

Testing complete, and with the code signed off by Gresham House, we prepared for the live import. Backing up all data, we switched off SAM and arranged to complete the migration out of office hours.

Including all preparation and testing, the entire process took no longer than 20 minutes. Once complete, we switched SAM on again and everything was ready for the next working day.

Overcoming the migration challenges.

So, how did we overcome the various complications? Here’s the detail, should you want to understand.

Maintaining data integrity

Within the main database, we created a table to store all IDs from Salesforce, HubSpot, and SAM. As we imported data, this table populated with the IDs and linked to the relevant records.

Data duplication

Collaborating with the team at Gresham House, we agreed to import one dataset at a time. We then exported the data from SAM into a CSV file, along with all the data to import. Putting this through a de-duplication tool, we highlighted any issues. Gresham House then reviewed the issues themselves as they knew the data.

We agreed with Gresham House that, when the record already existed, we’d take the SAM record as the master.

Text file notes

As part of the imported dataset, all notes files had a corresponding CSV file with an ID attached to it. This provided a link to the correct contact or deal. By reading the CSV, we could locate the correct note, extract the content, and create a record in the SAM database.

Mapping different field names

This had to be a manual process involving holding tables within the database. Once imported into the holding tables we were able to transfer each field of data to the appropriate table in SAM. We hard-coded these mappings into our import code.

Importing to Always Encrypted data fields

Always Encrypted database fields mean you can have data encrypted at rest. “At rest” refers to data stored on a stable medium, like a hard drive or backup tape, that’s not currently being processed or transmitted. Should a data breach occur, this encryption ensures the stored data remains protected, safeguarding its contents.

The challenge arises because you can only transfer data between encrypted fields when the encryption type matches. In this instance, we had to transfer plain text values (data from the CSVs) into the encrypted fields. You can’t do that without using an encryption certificate. Performing this one record at a time would take a long time, increasing the project costs.

We therefore created holding tables in the database that had fields with the appropriate encryption types. We then took the data from the CSVs and stored it in a data model within the application.

Connecting to the database using the encryption certificate, we then used a .NET class called SqlBulkCopy. This allowed us to copy the data model in bulk into the holding table. From that point, we could use SQL Server to transfer the data from the holding table to the final destination tables.

This complexity alone highlighted why writing custom code was the only answer for Gresham’s data migration.

Migrating at speed

From start to finish we ensured a dedicated team on the project. This kept coding, testing, and migration moving at speed. In fact, once we had the code and our test scripts in place, we could complete data migration from subsequent acquisitions within 48 hours.

Ensuring the right team for challenging data migrations.

Having an integrated and fully functioning CRM system is crucial to the performance of any business. For those with ambitious growth targets involving acquisition, data migration challenges will appear.

Finding the right partner to collaborate with can mean the difference between days of costly downtime and a seamless transition. Gresham House benefited from a proven partnership with Redox. The company also knows who to turn to as it acquires further businesses in the future.

Recent Posts

- The real cost of offshoring: Why UK companies are bringing software development back home

- Of heartstrings and algorithms: Music in the age of AI

- The heart-in-a-bucket problem: Why AI fails in organisations

- From a nudge to a sledgehammer: How AI’s gloves are off

- The hidden costs of poor UX: Why software interfaces matter more than you think